Measuring disparate outcomes of content recommendation algorithms with distributional inequality metrics

The bigger picture

In recent years, many examples of the potential harms caused by machine learning systems have come to the forefront. Practitioners in the field of algorithmic bias and fairness have developed a suite of metrics to capture one aspect of these harms: namely, differences in performance between different demographic groups and, in particular, worse performance for marginalized communities. Despite great progress made in this area, one open question became particularly prominent for industry practitioners: how do you capture such disparities if you do not have reliable demographic data or choose not to collect them due to privacy concerns? You have probably heard statistics like “the top 1% of people own X% share of the wealth,” and this work applies those notions to levels of engagement on Twitter. We use inequality metrics to understand exactly how skewed engagements are on Twitter and dig deeper to isolate some of the algorithms that may be driving that effect.

Highlights

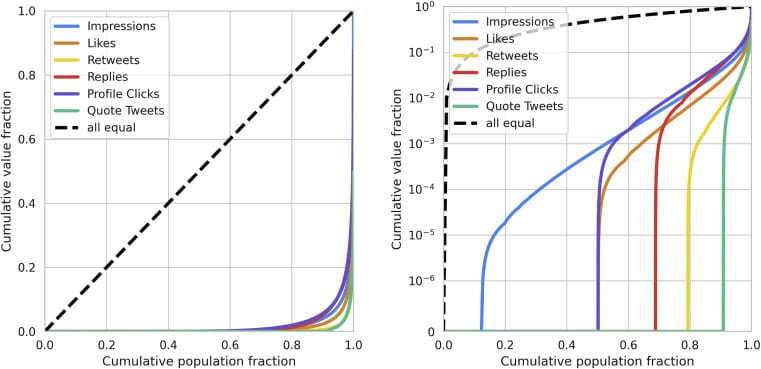

- In this dataset, we find the top 1% of authors receive ∼80% of all views of Tweets

- Inequality metrics work well for understanding disparities in outcomes on social media

- Out-of-network impressions are more skewed than in-network impressions•The top X% share metrics perform the best relative to our criteria

Summary

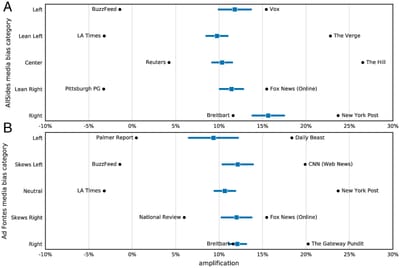

The harmful impacts of algorithmic decision systems have recently come into focus, with many examples of machine learning (ML) models amplifying societal biases. In this paper, we propose adapting income inequality metrics from economics to complement existing model-level fairness metrics, which focus on intergroup differences of model performance. In particular, we evaluate their ability to measure disparities between exposures that individuals receive in a production recommendation system, the Twitter algorithmic timeline. We define desirable criteria for metrics to be used in an operational setting by ML practitioners. We characterize engagements with content on Twitter using these metrics and use the results to evaluate the metrics with respect to our criteria. We also show that we can use these metrics to identify content suggestion algorithms that contribute more strongly to skewed outcomes between users. Overall, we conclude that these metrics can be a useful tool for auditing algorithms in production settings.

Read the full research report

Publication details

Authors: Tomo Lazovich, Luca Belli, Aaron Gonzales, Amanda Bower, Uthaipon Tantipongpipat, Kristian Lum, Ferenc Huszar, Rumman Chowdhury

Publication date: 2022/8/12

Journal: Patterns Volume 3 Issue 8

Publisher: Elsevier

Subscribe to my newsletter to get the latest updates and news